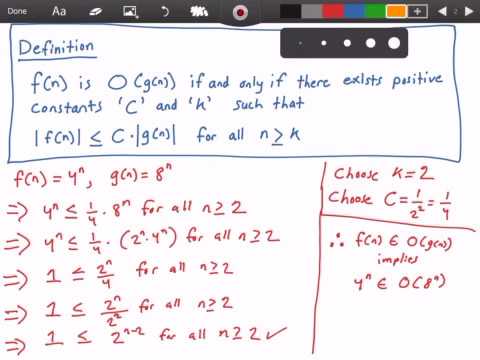

For instance, if we want a rapid response and aren't concerned about space constraints, an appropriate alternative could be an approach with lower time complexity but higher space complexity, such as Merge Sort. @ILoveFortran: It would seem to me that "measuring how well an algorithm scales with size", as you noted, is in fact related to its efficiency. One major underlying factor affecting your program's performance and efficiency is the hardware, OS, and CPU you use. Is the definition actually different in CS, or is it just a common abuse of notation?

We can say that the worst-case running time of binary search is $O(\log_2 n)$. Big O helps programmers identify and fully understand the worst-case scenario and the execution time or memory required by an algorithm. For the recursive factorial, put the cost of the body and the number of recursive calls together and you have the performance of the whole recursive function: $1 \cdot (n-1) = O(n)$. Peter, to answer the issues you raised: the method I describe here actually handles this quite well. This means the time complexity is exponential, of order O(2^n). Then you have O(n), O(n^2), and O(n^3) running times.

Is there a tool to automatically calculate the Big-O complexity of a function? (That question was closed as a duplicate of "Programmatically obtaining Big-O efficiency of code".)
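
Merge Sort is named above as the option that trades memory for speed. The following is a minimal Python sketch of it. Python is an assumption, chosen because the text later mentions Python tooling, and the name `merge_sort` is illustrative. The sketch runs in O(n log n) time, but it builds temporary lists while merging, so it uses O(n) extra memory.

```python
def merge_sort(items):
    """Return a new sorted list.

    Time: O(n log n), since there are log n levels of halving and O(n) merge work per level.
    Space: O(n) extra, for the temporary lists created while merging.
    """
    if len(items) <= 1:
        return list(items)
    middle = len(items) // 2
    left = merge_sort(items[:middle])
    right = merge_sort(items[middle:])

    # Merge the two sorted halves into a new list.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```
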
I feel this stuff is helpful for me to design, refactor, and debug programs. You can also see Big O as a way to measure how effectively your code scales as your input size increases. When your algorithm does not depend on the input size n, it is said to have constant time complexity, of order O(1).

Here are some of the most common cases, lifted from http://en.wikipedia.org/wiki/Big_O_notation#Orders_of_common_functions:

- O(1): determining if a number is even or odd; using a constant-size lookup table or hash table
- O(log n): finding an item in a sorted array with a binary search
- O(n): finding an item in an unsorted list; adding two n-digit numbers
- O(n^2): multiplying two n-digit numbers by a simple algorithm; adding two n×n matrices; bubble sort or insertion sort
- O(n^3): multiplying two n×n matrices by the simple algorithm
- O(c^n): finding the (exact) solution to the traveling salesman problem using dynamic programming; determining if two logical statements are equivalent using brute force
- O(n!): solving the traveling salesman problem via brute-force search
- O(n^n): often used instead of O(n!) to derive simpler formulas for asymptotic complexity

A good introduction is *An Introduction to the Analysis of Algorithms* by R. Sedgewick and P. Flajolet.

So as I was saying, in calculating Big-O we're only interested in the biggest term, O(2n), and because constant factors are dropped, that is O(n). For code B, even though the inner loop never steps in to execute foo(), the inner loop is still entered once per outer iteration, n times in total, which is O(n). Big O is a way to describe the speed or complexity of a given algorithm in algebraic terms. In the recursion tree, the root is the original array, and the root has two children, which are the subarrays.
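
The O(n^2) entry for bubble sort can be checked by counting comparisons. The following is a hypothetical Python sketch, not code from the original text. This variant never exits early, so for n items it always makes n(n-1)/2 comparisons, which grows as n^2.

```python
def bubble_sort(items):
    """Sort a copy of items with plain bubble sort.

    Returns (sorted_list, comparisons). With no early exit, the inner loop
    runs (n-1) + (n-2) + ... + 1 = n(n-1)/2 times, i.e. O(n^2).
    """
    a = list(items)
    n = len(a)
    comparisons = 0
    for i in range(n - 1):
        for j in range(n - 1 - i):
            comparisons += 1
            if a[j] > a[j + 1]:
                a[j], a[j + 1] = a[j + 1], a[j]
    return a, comparisons
```

For example, `bubble_sort([5, 1, 4, 2, 3])` gives `([1, 2, 3, 4, 5], 10)`, and 100 items always cost 4950 comparisons.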
The list above is useful because of the following fact: if a function f(n) is a sum of functions, one of which grows faster than the others, then the faster-growing one determines the order of f(n). The number of iterations of a simple for-loop can be determined by subtracting the lower limit from the upper limit found on the loop's header line. Disclaimer: this answer contains false statements; see the comments below. This is misleading. Take a function that adds the numbers from 1 up to its input: if your input is 4, it will add 1+2+3+4 and output 10; if your input is 5, it will output 15 (meaning 1+2+3+4+5). Big O helps us to measure how well such an algorithm scales.
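
The 1+2+...+n example can be written in two ways with different growth rates. The following is a minimal Python sketch with illustrative function names. The loop performs one addition per number, so it is O(n). Gauss's closed form n(n+1)/2 uses a fixed number of arithmetic operations, so it is O(1) when arithmetic is treated as constant-cost.

```python
def sum_to_n_loop(n):
    """O(n): one addition for each number from 1 to n."""
    total = 0
    for i in range(1, n + 1):
        total += i
    return total


def sum_to_n_formula(n):
    """O(1): a fixed number of arithmetic operations, whatever n is."""
    return n * (n + 1) // 2
```
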


Assume you're given a number and want to find the nth element of the Fibonacci sequence. Code like this can often be solved easily using summations. The first thing you need to ask is the order of execution of foo(). The input of the function is the size of the structure to process, and complexity is calculated by counting the elementary operations. Simply put, Big O notation tells you the number of operations an algorithm will make. The tool can be used to get a basic understanding of Big O notation.
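
The nth-Fibonacci task is a classic example of exponential growth. The following is a hypothetical Python sketch that returns the number of calls alongside the value. With fib(0) = 0 and fib(1) = 1, plain recursion makes 2·fib(n+1) - 1 calls. That count is bounded by O(2^n), and its tight growth rate is about 1.618^n. The iterative version does the same job in O(n) steps.

```python
def fib_naive(n):
    """Return (fib(n), number_of_calls) using plain recursion: exponential time."""
    if n < 2:
        return n, 1
    a, calls_a = fib_naive(n - 1)
    b, calls_b = fib_naive(n - 2)
    return a + b, calls_a + calls_b + 1


def fib_iterative(n):
    """Return fib(n) with a single loop: O(n) time, O(1) extra space."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a
```

For example, `fib_naive(10)` returns `(55, 177)`. Doubling n to 20 raises the call count to 21891, while the loop runs only 20 times.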

The complexity of a function is the relationship between the size of the input and the difficulty of running the function to completion. Big-O makes it easy to compare algorithm speeds and gives you a general idea of how long the algorithm will take to run. The term Big-O is typically used to describe general performance, but it most often describes the worst-case (i.e., slowest) speed the algorithm could run at. In a cost written as n·f, n is the number of items and f represents the operation done per item. Big O notation is a metric for determining an algorithm's efficiency. Finding the dominant term is somewhat similar to the expedient method of determining limits for fractional polynomials, in which you are ultimately just concerned with the dominating terms of the numerators and denominators. When writing down nested loops, open a parenthesis for each loop and close it only when you find something outside the previous loop. An algorithm is a set of well-defined instructions for solving a specific problem. Should we sum complexities? Otherwise, you would be better off using different methods, like benchmarking. In Big O, there are six major types of complexities (time and space). Before we look at examples for each time complexity, let's understand the Big O time complexity chart.
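
One way to answer "should we sum complexities?" is this: code that runs one block after another adds its costs, while nested loops multiply them. The following is a minimal Python sketch with illustrative names that counts loop-body executions to show the difference.

```python
def sequential(n):
    """Two loops one after the other: n + n = 2n steps, which is O(n)."""
    steps = 0
    for _ in range(n):
        steps += 1
    for _ in range(n):
        steps += 1
    return steps


def nested(n):
    """A loop inside a loop: n * n steps, which is O(n^2)."""
    steps = 0
    for _ in range(n):
        for _ in range(n):
            steps += 1
    return steps
```

By the same rule, a step costing O(n^3) followed by a step costing O(n^2) adds up to O(n^3 + n^2), which is O(n^3).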
For example, suppose an algorithm is to return the factorial of any input number. To decide which of two functions dominates, take $\lim_{n \to \infty} f(n)/g(n)$. g(n) dominates f(n) if the result is 0. For the proof that $n^3 + 20n + 1 \in O(n^3)$, the constants $ n \geq 1 $ and $ c \geq 22 $ work. Do you have any helpful references on this? I was wondering if you are aware of any library or methodology (I work with Python/R, for instance) to generalize this empirical method, meaning fitting various complexity functions to datasets of increasing size and finding out which one is relevant. In contrast, the worst-case scenario would be O(n) if the value sought was the array's final item or was not present. Logarithmic time complexity, O(log n), differs from linear time complexity in that the algorithm does not process the whole input: each step halves the input that remains. Note that O(n^n) contains, but is strictly larger than, O(n!). The entropy of that decision is $\frac{1}{1024}\log_2\frac{1024}{1} + \frac{1023}{1024}\log_2\frac{1024}{1023} = \frac{1}{1024}\cdot 10 + \frac{1023}{1024}\cdot(\text{about } 0) \approx 0.01$ bit. However, for the moment, focus on the simple form of for-loop, where the difference between the final and initial values, divided by the amount by which the index variable is incremented, tells us how many times we go around the loop. This is just another way of saying $b + b + \dots + b$ (a times) $= a \cdot b$ (by definition, for some definitions of integer multiplication). We have a problem here: when i takes the value N/2 + 1 upwards, the inner summation ends at a negative number! When a body costing C steps runs N times, that is the same as adding C, N times: $C + C + \dots + C = N \cdot C$. There is no mechanical rule to count how many times the body of the for gets executed; you need to count it by looking at what the code does.
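
The negative inner summation comes from loops like the one below. The text does not reproduce the original code, so this nested loop is a hypothetical reconstruction of that kind of example, written in Python. The inner loop runs n - i times while i < n and not at all afterwards. A naive summation over i therefore goes negative and has to be cut off at 0. The total is about n^2/4, which is O(n^2).

```python
def count_foo_calls(n):
    """Count how often foo() would run in this hypothetical C-style loop:

        for (i = 0; i < 2*n; i += 2)
            for (j = n; j > i; j--)
                foo();
    """
    calls = 0
    for i in range(0, 2 * n, 2):
        for j in range(n, i, -1):  # j = n, n-1, ..., i+1; empty once i >= n
            calls += 1
    return calls
```

For even n, the count works out to n^2/4 + n/2. For example, n = 10 gives 30 calls.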
Our f() has two terms, and only the faster-growing one is kept. IMHO, in Big-O formulas you'd better not use more complex expressions (you might just stick to the ones in the standard complexity chart). I would also like to add how it is done for recursive functions: suppose we have a Scheme function that recursively calculates the factorial of the given number. Finally, just wrap the result in Big Oh notation. Divide the terms of the polynomial and sort them by rate of growth. +1 for the recursion. Also this one is beautiful: "even the professor encouraged us to think" :). The tool's calculation is performed by generating a series of test cases with increasing argument size, then measuring each test case's run time, and determining the probable time complexity based on the gathered durations. If we have a sum of terms, the term with the largest growth rate is kept, and the other terms are omitted. Dividing $n^3 + 20n + 1 \leq c\,n^3$ by $n^3$ gives:

\[ 1 + \frac{20}{n^2} + \frac{1}{n^3} \leq c \]

When the running time doubles with each element added to the input, the time complexity is exponential, O(2^n). The fewer the steps, the faster the algorithm.
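
The recursive factorial argument can be traced in code. The original uses Scheme; the following is a minimal Python analog with an illustrative name. It returns the number of calls so you can see the n - 1 recursive calls, each doing O(1) work, which gives O(n) overall.

```python
def factorial(n):
    """Return (n!, number_of_calls), computed recursively.

    Each call does O(1) work (a comparison and a multiplication) besides its
    single recursive call, so the total cost is proportional to n.
    """
    if n <= 1:
        return 1, 1
    sub_result, calls = factorial(n - 1)
    return n * sub_result, calls + 1
```

For example, `factorial(5)` returns `(120, 5)`: the top-level call plus 4 recursive calls.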
This means that when a function has a loop that iterates over an input of size n, it is said to have a time complexity of order O(n). Any comparison sort must look at every item in the array at least once, so no comparison sort can run in less than linear time; in fact, the worst-case lower bound for comparison sorting is Ω(n log n). The second decision isn't much better. O(1) means (almost, mostly) a constant C, independent of the size N. The for statement in sentence number one is the tricky part. Also, in some cases, the runtime is not a deterministic function of the size n of the input. Structure-accessing operations (e.g., array indexing like A[i], or pointer following with the -> operator) also take constant time. Unless it is possible to execute the loop zero times, the time to initialize the loop and test the limit once is a low-order term that can be dropped. But I think intentionally complicating Big-Oh is not the solution. There are many ways to calculate the BigOh. Seeing the answers here, I think we can conclude that most of us do indeed approximate the order of the algorithm by looking at it and using common sense, instead of calculating it with, for example, the master method as we were taught at university. That is what programmers (or at least people like me) search for. To really nail it down, you need to be able to describe the probability distribution of your "input space" (if you need to sort a list, how often is that list already going to be sorted?). To get the actual BigOh, we need the asymptotic analysis of the function. Efficiency is measured in terms of both time complexity and space complexity. Small reminder: Big O notation is used to denote asymptotic complexity (that is, when the size of the problem grows to infinity), and it hides a constant factor.
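
The point that runtime is not a deterministic function of n is easy to see with linear search. The following is a minimal Python sketch with an illustrative name. For the same input size, the comparison count depends on where the target sits: one comparison in the best case, n in the worst case (last item or missing).

```python
def linear_search(items, target):
    """Return (index or -1, comparisons made) for a left-to-right scan."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons
```

With `data = list(range(100))`, searching for 0 costs 1 comparison, while searching for 99 or for a missing value costs 100.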
Time complexity estimates the time to run an algorithm. Prove that $ f(n) \in O(n^3) $, where $ f(n) = n^3 + 20n + 1 $. I've found that nearly all algorithmic performance issues can be looked at in this way. It's not always feasible to know that, but sometimes you do. So the performance for the recursive calls is O(n-1) (the order is n, as we throw away the insignificant parts). Very rarely (unless you are writing a platform with an extensive base library, like the .NET BCL or C++'s STL) will you encounter anything more difficult than just looking at your loops (for statements, while, goto, etc.). Recursive algorithms, while loops, and a variety of other constructs affect the complexity of a piece of code. The jump statements break, continue, goto, and return expression, where expression does not contain a function call, also count as primitive operations. The best case would be when we search for the first element, since we would be done after the first check. Big-O provides a common language for comparing the algorithms used in computer science. This BigO Calculator library allows you to calculate the time complexity of a given algorithm, although there are more reliable ways of determining Big-O complexity than using this tool alone. Hi, nice answer. This will be an in-depth cheatsheet to help you understand how to calculate the time complexity of any algorithm. Formally, f(n) = O(g(n)) means there are positive constants c and k such that $0 \leq f(n) \leq c\,g(n)$ for all $n \geq k$. The values of c and k must be fixed for the function f and must not depend on n. OK, so now what do we mean by "best-case" and "worst-case" complexities? For instance, if you're searching for a value in a list, it's O(n), but if you know that most lists you see have your value up front, the typical behavior of your algorithm is faster. Once the inner loop is bounded, we conclude that each iteration of the outer loop takes O(n) time.
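
The claim $f(n) = n^3 + 20n + 1 \in O(n^3)$ follows directly from the definition with $c = 22$ and $k = 1$. What follows is a sketch of one standard argument; other choices of the constants also work. For $n \geq 1$, we have $n \leq n^3$ and $1 \leq n^3$, so

\[
f(n) = n^3 + 20n + 1 \;\leq\; n^3 + 20n^3 + n^3 = 22\,n^3 \qquad \text{for all } n \geq 1 .
\]

Dividing both sides by $n^3$ gives the equivalent form $1 + \frac{20}{n^2} + \frac{1}{n^3} \leq 22$. The left side is largest at $n = 1$, where it equals exactly 22.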
When you have a single loop within your algorithm, it has linear time complexity, O(n). Add up the Big O of each operation. I hope that this tool is still somewhat helpful in the long run, despite how hard it is to determine code complexity automatically. For instance, a for-loop whose index i starts at 0, stops before reaching n - 1, and is incremented by 1 iterates ((n - 1) - 0)/1 = n - 1 times. Calls to library functions (e.g., scanf, printf) are treated as primitive operations as well. As a very simple example, say you wanted to do a sanity check on the speed of the .NET framework's list sort. I'll do my best to explain it here in simple terms, but be warned that this topic takes my students a couple of months to finally grasp. In this example I measure the number of comparisons, but it's also prudent to examine the actual time required for each sample size. That's impossible and wrong. Two rules help when simplifying Big O time or space complexity: drop constant factors, and drop non-dominant terms. Big O specifically uses the letter O because a function's growth rate is also known as the function's order. But you don't consider this when you analyze an algorithm's performance. It can be used to analyze how functions scale with inputs of increasing size. This is roughly done like this: take away all the constants C, then get the polynomial of f() in its standard form. Big-O is just for comparing the complexity of programs, that is, how fast they grow as the inputs increase, not the exact time spent doing the work. Different algorithm implementations can also affect the complexity of a set of code.
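
The "take away the constants, keep the fastest-growing term" procedure can be mechanized for plain polynomials. The following is a hypothetical Python helper, not part of any tool mentioned in the text. It assumes the polynomial is given as a dict from non-negative integer exponents to coefficients, with at least one non-zero coefficient.

```python
def dominant_term(poly):
    """Return the Big-O class of a polynomial given as {exponent: coefficient}.

    Constant factors are dropped (coefficients are ignored), and only the
    highest exponent with a non-zero coefficient is kept.
    """
    degree = max(exp for exp, coef in poly.items() if coef != 0)
    if degree == 0:
        return "O(1)"
    if degree == 1:
        return "O(n)"
    return f"O(n^{degree})"


# f(n) = n^3 + 20n + 1
print(dominant_term({3: 1, 1: 20, 0: 1}))  # O(n^3)
```

This only covers polynomials. Terms like n log n or 2^n need a different representation.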
[Figure: Big-O Complexity Chart rating O(1), O(log n), O(n), O(n log n), O(n^2), O(2^n), and O(n!) on a scale from Excellent to Horrible]

And what if the real big-O value was O(2^n), or something like O(x^n)? Then this algorithm probably wouldn't be practical. The tool lets you test time complexity, calculate runtime, and compare two sorting algorithms. Clearly, we go around the loop n times. In particular, if n is an integer variable which tends to infinity and x is a continuous variable tending to some limit, if φ(n) and φ(x) are positive functions, and if f(n) and f(x) are arbitrary functions, then f is said to be O(φ) provided that |f| < Aφ for some constant A and all values of n and x. However, Big O hides some details which we sometimes can't ignore. In programming, Big O describes the assumed worst-case time taken. Each algorithm has its own time and space complexity. Suppose you are searching a table of N items, like N = 1024. O(1) means your algorithm runs a fixed number of statements, with no iteration that depends on the input size. In mathematics, O(·) denotes an upper bound. However, for many algorithms you can argue that there is not a single time for a particular size of input. Keep in mind (from the meaning above) that we just need the worst-case time and/or the maximum repeat count affected by N (the size of the input).

NOTICE: There are plenty of issues with this tool, and I'd like to make some clarifications. Most people with a degree in CS will certainly know what Big O stands for. Keep the term that grows fastest as N approaches infinity. In binary search, if the middle value is the target, you are done. Otherwise, you must check whether the target value is greater or less than the middle value to adjust the first and last index, reducing the input size by half.
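
The halving step just described is the whole of binary search. The following is a minimal Python sketch with an illustrative name, assuming the input list is already sorted in ascending order. It also counts loop iterations: for N = 1024 items, no search takes more than log2(1024) + 1 = 11 iterations.

```python
def binary_search(sorted_items, target):
    """Return (index or -1, loop iterations) for an ascending sorted list."""
    first, last = 0, len(sorted_items) - 1
    steps = 0
    while first <= last:
        steps += 1
        middle = (first + last) // 2
        if sorted_items[middle] == target:
            return middle, steps
        if target > sorted_items[middle]:
            first = middle + 1  # discard the lower half
        else:
            last = middle - 1   # discard the upper half
    return -1, steps
```
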
What is time complexity, and how do you find it? Calculate the Big O of each operation. In computer science, Big-O represents the efficiency or performance of an algorithm. The difficulty of a problem can be measured in several ways; the recursive Fibonacci sequence is a good example. An algorithm's upper bound, Big-O, is often used to denote how well it handles the worst-case scenario. You could write a small test harness, then analyze the results in Excel to make sure they did not exceed an n·log(n) curve. When it comes to comparison sorting algorithms, the n in Big-O notation represents the number of items in the array being sorted. Since we can find the median in O(n) time and split the array into two parts in O(n) time, the work done at each node of the recursion tree is O(k), where k is the size of that node's array. Now the summations can be simplified using standard summation identities. Big O gives the upper bound on the time complexity of an algorithm, but it does not tell you how fast your algorithm's runtime is in absolute terms. big_O is a Python module that estimates the time complexity of Python code from its execution time.

Site design / logo 2023 Stack Exchange Inc; user contributions licensed under CC BY-SA.
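
The .NET list-sort sanity check can be mirrored in Python. The following is an analog, not the author's original harness. It counts how many times Python's built-in `sorted()` calls a comparison function, wrapped with `functools.cmp_to_key`, and divides by n·log2(n). If that ratio stays roughly flat as n grows, the results are consistent with O(n log n). The function name and sample sizes are illustrative choices.

```python
import functools
import math
import random


def count_sort_comparisons(n, seed=0):
    """Sort n random floats with sorted() and return how many comparisons it made."""
    rng = random.Random(seed)
    data = [rng.random() for _ in range(n)]
    calls = 0

    def compare(a, b):
        nonlocal calls
        calls += 1
        return (a > b) - (a < b)

    result = sorted(data, key=functools.cmp_to_key(compare))
    assert result == sorted(data)  # the counting wrapper must not change the order
    return calls


for n in (1_000, 10_000, 50_000):
    c = count_sort_comparisons(n)
    # A roughly constant ratio as n grows is consistent with O(n log n).
    print(n, c, round(c / (n * math.log2(n)), 3))
```

As the text suggests, comparison counts are only half the story. Timing each sample size as well is prudent.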
Following are a few of the most popular Big O functions:

- Constant function: O(1)
- Logarithmic function: O(log n)
- Quadratic function: O(n^2)
- Cubic function: O(n^3)

With this knowledge, you can use the Big-O calculator to find the time and space complexity of functions. In fact, it's exponential in the number of bits you need to learn. After all, the input size decreases with each iteration. Big-O Calculator is an online tool that helps you compute the complexity domination of two algorithms. In addition to using the master method (or one of its specializations), I test my algorithms experimentally. Thus, we can neglect the O(1) time to increment i and to test whether i < n in each iteration. Welcome to the Big O Notation calculator! We are going to add up the individual number of steps of the function, and neither the local variable declaration nor the return statement depends on the size of the data array. Simple; let's look at some examples. The loop stops when the index reaches some limit. But remember that we just need to consider the maximum repeat count (or worst-case time taken). big_O executes a Python function for inputs of increasing size N and measures its execution time. You can use the Big-O Calculator by following the given guidelines, and the calculator will provide you with the results. The calculator removes uncertainty by using the worst-case scenario: the algorithm will never do worse than we anticipate. Big O defines the runtime required to execute an algorithm by identifying how the performance of your algorithm will change as the input size grows. Finally, click the Submit button, and the whole step-by-step solution for the Big O domination will be displayed.
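
The step counting for a summing function can be made concrete. The following is a minimal Python sketch with an illustrative name. It assumes, as the text does, that every simple statement costs one constant step C (taken as 1 here) and ignores the loop's own bookkeeping. The count is N + 2, i.e. f(N) = 2C + N·C. Dropping the constants leaves O(N).

```python
def sum_array(data):
    """Sum a list while counting abstract steps, assuming each simple
    statement costs one constant step (C = 1)."""
    steps = 0
    result = 0           # local variable initialisation: 1 step
    steps += 1
    for value in data:   # the body runs N = len(data) times
        result += value  # 1 step per iteration
        steps += 1
    steps += 1           # the return statement: 1 step
    return result, steps
```

For example, `sum_array([1, 2, 3, 4])` returns `(10, 6)`: four loop steps plus the two constant statements.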
Because there are various ways to solve a problem, there must be a way to evaluate these solutions or algorithms in terms of performance and efficiency (the time it will take for your algorithm to run and the total amount of memory it will consume). For example, an if statement having two branches, both equally likely, has an entropy of $\frac{1}{2}\log_2\frac{2}{1} + \frac{1}{2}\log_2\frac{2}{1} = \frac{1}{2}\cdot 1 + \frac{1}{2}\cdot 1 = 1$ bit. Assignment statements that do not involve function calls in their expressions are also primitive operations. The Big Oh of the above is f(n) = O(n!). For some (many) special cases you may be able to come up with simple heuristics, like multiplying loop counts for nested loops. However, this kind of best-case performance can only happen if the input array is already sorted. In a loop whose index i starts at 0, n - 1 is the highest value reached by i (when i reaches n - 1, the loop stops and no iteration occurs with i = n - 1), and 1 is added to i at each iteration of the loop. From this point forward we are going to assume that every sentence that doesn't depend on the size of the input data takes a constant number C of computational steps. A perfect way to explain this would be an array with n items. Looking at the code in the image above, we only have three statements. Similarly, logs with different constant bases are equivalent in Big O, because they differ only by a constant factor. You have N items, and you have a list. Strictly speaking, we must then add O(1) time to initialize the loop index and O(1) time for the final comparison of the index with the limit, because we test one more time than we go around the loop. This is barely scratching the surface, but when you get to analyzing more complex algorithms, complex math involving proofs comes into play.
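
The entropy figures used for decisions (1 bit for a fair two-way if, about 0.01 bit for a 1-in-1024 table probe) can be reproduced with a few lines of Python. The following is a minimal sketch with an illustrative function name, using the Shannon entropy formula in bits.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy, in bits, of a decision whose outcomes occur with the
    given probabilities (which should sum to 1)."""
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)


fair_if = entropy_bits([0.5, 0.5])                   # two equally likely branches
table_probe = entropy_bits([1 / 1024, 1023 / 1024])  # "is it this entry?" among 1024 items
print(fair_if, table_probe)
```

The fair branch gives exactly 1.0 bit. The lopsided probe gives roughly 0.011 bit, which is why such a decision teaches you so little per step.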
We can now close any parentheses left open in our write-up. Then try to further shorten the "n(n)" part to n^2. What often gets overlooked is the expected behavior of your algorithms. As the input increases, Big O describes how long the function takes to execute, or how effectively the function scales. It is always good practice to understand execution time in a way that depends only on the algorithm and its input. Put simply, it gives an estimate of how long your code takes to run on different sets of inputs. It is usually used in conjunction with processing data sets (lists), but can be used elsewhere. I would like to emphasize once again that here we don't want an exact formula for our algorithm. If your current project demands a predefined algorithm, it's important to understand how fast or slow it is compared to other options. Logarithmic time is the second best, after constant time, because each step discards half of the remaining input instead of processing the full input. In the calculator, f(n) is the dominated function. This would lead to O(1). Primitive operations in C take O(1) time; the justification for this principle requires a detailed study of the machine instructions (primitive steps) of a typical computer. When the body takes the same big-oh time on every iteration, we can multiply the big-oh upper bound for the body by the number of iterations. Results may vary. Enter the dominating function g(n) in the provided entry box. The symbol O(x), pronounced "big-O of x", is one of the Landau symbols and is used to symbolically express the asymptotic behavior of a given function. It doesn't change the Big-O of your algorithm, but it does relate to the statement "premature optimization is the root of all evil." A function described in Big O notation usually only provides an upper bound on the function's growth rate. You can solve these problems in various ways.
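
The domination test compares f(n)/g(n) as n grows. The following is a rough numeric sketch in Python, not how the online calculator works internally. It evaluates the ratio at n = 10, 100, ..., 10^6. A ratio that keeps shrinking toward 0 hints that g(n) dominates f(n). This is a numeric hint only, not a proof of the limit. The function names are illustrative.

```python
import math


def ratio_trend(f, g, exponents=range(1, 7)):
    """Return f(n)/g(n) for n = 10**k; steadily falling values toward 0
    suggest (but do not prove) that g dominates f."""
    return [f(10 ** k) / g(10 ** k) for k in exponents]


def n_log_n(n):
    return n * math.log2(n)


def n_squared(n):
    return n ** 2


ratios = ratio_trend(n_log_n, n_squared)
print(ratios)  # values fall steadily toward 0, so n^2 dominates n log n
```
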
The Fibonacci sequence is a mathematical sequence in which each number is the sum of the two preceding numbers, where 0 and 1 are the first two numbers. Great answer, but I am really stuck. Remember that we are counting the number of computational steps, meaning that the body of the for statement gets executed N times. The method described here is also one of the methods we were taught at university, and if I remember correctly it was used for far more advanced algorithms than the factorial I used in this example. If you really want to answer your question for any algorithm, the best you can do is apply the theory. Calculate Big-O Complexity Domination of 2 algorithms. Another programmer might decide to first loop through the array before returning the first element. This is just an example; likely nobody would do this. To simplify the calculations, we are ignoring the variable initialization, condition, and increment parts of the for statement. That's how much you learn by executing that decision. The degree of space complexity is related to how much memory the function uses. Would it be an addition or a multiplication, considering step 4 is n^3 and step 5 is n^2? In mathematics, O(·) means an upper bound, and Θ(·) means a tight bound (both an upper and a lower bound). Take sorting using quicksort, for example: the time needed to sort an array of n elements is not a constant but depends on the starting configuration of the array. Big O, also known as Big O notation, represents an algorithm's worst-case complexity. As we have discussed before, the dominating function g(n) only dominates if the calculated result is zero.
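
The "return the first element" comparison refers to code the text does not show. The following is a hypothetical Python version of both approaches. Both return the same value, but the first does a single index operation, O(1), while the second walks the whole list first, O(n).

```python
def first_element(items):
    """O(1): a single index operation, regardless of len(items)."""
    return items[0]


def first_element_after_loop(items):
    """O(n): needlessly walks the entire list before returning the same answer."""
    for _ in items:
        pass
    return items[0]
```
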
When you perform nested iteration, meaning a loop inside a loop, the time complexity is quadratic, which is horrible. Don't forget to also allow for space complexity, which can be a cause for concern if you have limited memory resources. The Big-O asymptotic notation gives us the upper-bound idea, described mathematically below: f(n) = O(g(n)) if there exist a positive integer $n_0$ and a positive constant c such that $f(n) \leq c \cdot g(n)$ for all $n \geq n_0$.

The general stepwise procedure for Big-O runtime analysis is as follows:

1. Figure out what the input is and what n represents.
2. Calculate the Big O of each operation and add them up.
3. Keep the term that grows fastest as n approaches infinity.
4. Take away the constant factors.

The complexity of a function is the relationship between the size of the input and the difficulty of running the function to completion. Big O notation is a metric for determining an algorithm's efficiency: it makes it easy to compare algorithm speeds and gives you a general idea of how long an algorithm will take to run. The term Big-O is typically used to describe general performance, but it specifically describes the worst-case (i.e., slowest) speed at which the algorithm could run.

An algorithm is a set of well-defined instructions for solving a specific problem, for example returning the factorial of any input number. In Big O, there are six major types of complexity (for both time and space): constant O(1), logarithmic O(log n), linear O(n), quadratic O(n^2), exponential O(2^n), and factorial O(n!).

To decide which of two functions dominates, compare them in the limit: $g(n)$ dominates $f(n)$ if $\lim_{n \to \infty} f(n)/g(n) = 0$. This is similar to the expedient method of evaluating limits of rational functions, where you are ultimately concerned only with the dominating term of the numerator and of the denominator.

A natural follow-up is whether a library or methodology (in Python or R, for instance) generalizes the empirical approach: fitting various complexity functions to timings on datasets of increasing size and finding out which one fits. When a closed-form analysis is impractical, you are better off using empirical methods such as benchmarking.
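The limit test can be explored numerically. The sketch below, with illustrative names, evaluates f(n)/g(n) for growing n using f(n) = n log n and g(n) = n^2. A ratio that keeps shrinking toward 0 suggests that g dominates f; this is only a heuristic check, not a proof of the limit.

```python
import math


def ratio_trend(f, g, sizes=(10, 100, 1_000, 10_000, 100_000)):
    """Return f(n) / g(n) for each n in sizes."""
    return [f(n) / g(n) for n in sizes]


def f(n):
    return n * math.log2(n)   # f(n) = n log n


def g(n):
    return n ** 2             # g(n) = n^2


ratios = ratio_trend(f, g)
print(ratios)  # values shrink toward 0, consistent with g dominating f
```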
For a linear search, the best case is finding the value at the first position; in contrast, the worst case is O(n), when the value sought is the array's final item or is not present at all. Logarithmic time, O(log n), is similar to linear time, except that each step halves the remaining input, so the runtime grows with the number of halvings rather than with the input size itself. At the other extreme, O(n^n) is often used instead of O(n!) to derive simpler formulas for asymptotic complexity; it contains, but is strictly larger than, O(n!), since $n! \leq n^n$.

Another way to look at search costs is through information theory. An if statement with two equally likely branches has an entropy of $\frac{1}{2}\log_2\frac{2}{1} + \frac{1}{2}\log_2\frac{2}{1} = 1$ bit; that's how much you learn by executing that decision. Now suppose you are searching a table of N items, like N = 1024, one item at a time. The first decision ("is it this item?") succeeds with probability 1/1024, so its entropy is $\frac{1}{1024}\log_2\frac{1024}{1} + \frac{1023}{1024}\log_2\frac{1024}{1023} \approx \frac{10}{1024} + 0.0014 \approx 0.01$ bit. The second decision isn't much better. In fact, the number of steps is exponential in the number of bits you need to learn (10 bits for 1024 items).

For now, focus on the simple form of for-loop, where the difference between the final and initial values, divided by the amount by which the index variable is incremented, tells us how many times we go around the loop. There is no mechanical rule for the general case: you need to count how many times the body of the for gets executed by looking at what the code does. If the body takes a constant C steps and runs N times, that's the same as adding C, N times: $\sum_{i=1}^{N} C = C \cdot N$. This is just another way of saying that $b + b + \cdots + b$ (a times) $= a \cdot b$. Watch the bounds, though: in the nested example, when i takes the value N / 2 + 1 upwards, the inner summation ends at a negative number!

In Big-O formulas, it's best not to use expressions more complex than the standard ones (constant, logarithmic, linear, n log n, polynomial, exponential, factorial). Recursive functions can be analyzed too: suppose we have a function, originally written in Scheme, that recursively calculates the factorial of a given number.
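The entropy figures above follow from the binary entropy formula. This small sketch (the function name is illustrative) reproduces them: a 50/50 branch carries 1 bit, while the first comparison of a linear search over 1024 items carries only about 0.01 bit.

```python
import math


def decision_entropy(p):
    """Entropy in bits of a yes/no decision that comes out 'yes' with probability p."""
    if p in (0.0, 1.0):
        return 0.0  # a certain outcome teaches us nothing
    return p * math.log2(1 / p) + (1 - p) * math.log2(1 / (1 - p))


print(decision_entropy(0.5))        # fair two-way branch: 1.0 bit
print(decision_entropy(1 / 1024))   # "is it item 0?" in a 1024-item table: about 0.0112 bits
```

A binary search, by contrast, asks questions close to 50/50, so each comparison yields about one bit and 1024 items need only about 10 comparisons.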
If we have a sum of terms, the term with the largest growth rate is kept, with other terms omitted. When the running time doubles with each addition to the input, the time complexity is exponential, O(2^n). The fewer the steps, the faster the algorithm. When a function iterates over an input of size n, it is said to have a time complexity of order O(n); O(1), by contrast, means an (almost, mostly) constant cost C, independent of the size N. All comparison algorithms require that every item in an array is looked at at least once. Also, in some cases the runtime is not a deterministic function of the size n of the input.

An empirical calculator works by generating a series of test cases with increasing argument size, measuring the run time of each test case, and determining the probable time complexity from the gathered durations.
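The empirical approach can be sketched as follows. This is not the calculator's actual implementation; it is a minimal illustration with hypothetical function names that times a function on inputs of increasing size and fits the slope of log(time) against log(n). A slope near 1 suggests linear growth and near 2 suggests quadratic growth. Timings are noisy, so treat the result as a hint rather than a verdict.

```python
import math
import time


def measure(func, make_input, sizes):
    """Time func on inputs of increasing size and return (n, seconds) pairs."""
    samples = []
    for n in sizes:
        data = make_input(n)
        start = time.perf_counter()
        func(data)
        elapsed = time.perf_counter() - start
        samples.append((n, max(elapsed, 1e-9)))  # guard against a zero reading before taking logs
    return samples


def loglog_slope(samples):
    """Least-squares slope of log(time) against log(n).

    Roughly 1 suggests O(n), roughly 2 suggests O(n^2); noisy timings blur this.
    """
    xs = [math.log(n) for n, _ in samples]
    ys = [math.log(t) for _, t in samples]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


# Usage: time summing lists of doubling size; a slope near 1 is consistent with O(n).
timings = measure(sum, lambda n: list(range(n)), [100_000, 200_000, 400_000, 800_000])
print(round(loglog_slope(timings), 2))
```

Fitting a line in log-log space only distinguishes polynomial degrees; telling O(n) from O(n log n) needs more sizes and comparing fits against several candidate curves.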
There are many ways to calculate Big-O, but intentionally complicating it is not the solution. Seeing the answers here, I think we can conclude that most of us approximate the order of an algorithm by looking at it and using common sense, instead of calculating it with, for example, the master method as we were taught at university. Unless you are writing a platform with an extensive base library (for instance, the .NET BCL or C++'s STL), you will very rarely encounter anything more difficult than just looking at your loops (for statements, while, goto, etc.). Because every comparison algorithm must look at each item at least once, the best any comparison algorithm can do is linear time; strictly speaking, that is a lower bound, Ω(n), rather than an O bound.

Small reminder: Big O notation denotes asymptotic complexity (that is, behavior as the size of the problem grows to infinity), and it hides a constant. To get the actual Big-O, we need an asymptotic analysis of the function. Efficiency is measured in terms of both temporal complexity and spatial complexity, and time complexity estimates the time to run an algorithm.

To really nail it down, you need to be able to describe the probability distribution of your "input space" (if you need to sort a list, how often is that list already going to be sorted?). It's not always feasible to know that, but sometimes you do. I've found that nearly all algorithmic performance issues can be looked at in this way.

For the recursive factorial, the recursive calls contribute O(n - 1), which is order n once we throw away the insignificant parts.

Example: prove that $f(n) = n^3 + 20n + 1$ is $O(n^3)$. We need constants $c$ and $n_0$ such that $n^3 + 20n + 1 \leq c \cdot n^3$ for all $n \geq n_0$. Dividing both sides by $n^3$ gives

\[ 1 + \frac{20}{n^2} + \frac{1}{n^3} \leq c \]

For $n \geq 1$ the left-hand side is at most $1 + 20 + 1 = 22$, so the inequality holds with $n_0 = 1$ and any $c \geq 22$.
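The recursive factorial can be sketched in Python as a stand-in for the Scheme version. This illustrative variant also counts calls, which makes the recurrence T(n) = T(n - 1) + c visible: computing n! makes n + 1 calls, each doing constant work, so the running time is O(n).

```python
def factorial(n):
    """Return (n!, number of calls made), for n >= 0."""
    if n == 0:
        return 1, 1                    # base case: one call, constant work
    value, calls = factorial(n - 1)    # the T(n - 1) part
    return n * value, calls + 1        # plus constant work: one multiplication


print(factorial(5))   # (120, 6)
```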
Big-O gives you a common language for comparing the algorithms used in computer science. Simply put, Big O notation tells you how the number of operations an algorithm performs grows with the size of its input. This will be an in-depth cheatsheet to help you understand how to calculate the time complexity of any algorithm. The BigO Calculator library lets you calculate the time complexity of a given algorithm: you can test time complexity, calculate runtime, and compare two sorting algorithms.

Formally, $f(n) = O(g(n))$ means there are positive constants $c$ and $k$ such that $0 \leq f(n) \leq c \cdot g(n)$ for all $n \geq k$. The values of $c$ and $k$ must be fixed for the function $f$ and must not depend on $n$.

OK, so now what do we mean by "best-case" and "worst-case" complexities? The best case for a search is finding the first element, since we are done after the first check. If you're searching for a value in a list, it's O(n), but if you know that most lists you see have your value up front, the typical behavior of your algorithm is faster. When you have a single loop within your algorithm, the time complexity is linear, O(n). Add up the Big O of each operation together.

For instance, a for-loop whose index i starts at 0, stops when it reaches n - 1, and increases by 1 iterates ((n - 1) - 0)/1 = n - 1 times: when i reaches n - 1, the loop stops and no iteration occurs with i = n - 1. Strictly speaking, we must then add O(1) time to initialize the loop index and O(1) time for the last comparison of the index with the limit, because we test one more time than we go around the loop. However, unless it is possible to execute the loop zero times, the time to initialize the loop and test the limit once is a low-order term that can be dropped. Likewise, in a nested loop we can neglect the O(1) time to increment i and to test whether i < n in each iteration of an inner loop of n iterations, concluding that each iteration of the outer loop takes O(n) time.

As a very simple example, say you wanted to do a sanity check on the speed of the .NET framework's list sort.
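A minimal linear-search sketch makes the best and worst cases concrete. The helper name is illustrative; it returns the index found (or -1) together with the number of comparisons made.

```python
def linear_search(items, target):
    """Return (index or -1, comparisons made)."""
    comparisons = 0
    for i, value in enumerate(items):
        comparisons += 1
        if value == target:
            return i, comparisons
    return -1, comparisons


data = [7, 3, 9, 4]
print(linear_search(data, 7))   # best case, first element: (0, 1)
print(linear_search(data, 5))   # worst case, not present: (-1, 4)
```

The worst case costs one comparison per item, which is the O(n) bound.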
I'll do my best to explain it here in simple terms, but be warned that this topic takes my students a couple of months to finally grasp. Big-O is used to compare the complexity of programs, that is, how fast their cost grows as the inputs increase, not the exact time spent performing the work. It specifically uses the letter O because a function's growth rate is also known as the function's order, and it can be used to analyze how functions scale with inputs of increasing size. Hardware, OS, and CPU affect real-world speed, but you don't consider them when you analyze an algorithm's performance.

The simplification is roughly done like this:

1. Take away all the constants C.
2. From f(), get the polynomial in its standard form.
3. Split the polynomial into its terms and sort them by rate of growth.
4. Keep the one that grows bigger when N approaches infinity.
5. Finally, wrap it in Big-O notation.

And if the real Big-O value were O(2^n), or something like O(x^n), the algorithm would probably not be practical to run on large inputs. (In the sort sanity-check example, I measure the number of comparisons, but it's also prudent to examine the actual time required for each sample size.)

Big-O Complexity Chart: O(1) and O(log n) are rated excellent, O(n) good, O(n log n) fair, O(n^2) bad, and O(2^n) and O(n!) horrible.
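The simplification steps can be mimicked for step counts that are polynomials in n. This sketch assumes the step count is given as a mapping from exponent to coefficient (a hypothetical representation); it drops the coefficients and keeps only the highest-order term.

```python
def big_o_of_polynomial(coeffs):
    """coeffs maps exponent -> coefficient, e.g. {2: 5, 1: 2, 0: 3} for 5n^2 + 2n + 3."""
    terms = [exp for exp, coef in coeffs.items() if coef != 0]
    if not terms:
        raise ValueError("step count must have at least one nonzero term")
    degree = max(terms)          # keep the term that grows fastest as n -> infinity
    if degree == 0:
        return "O(1)"            # only a constant term
    if degree == 1:
        return "O(n)"            # coefficient dropped: 2n -> n
    return f"O(n^{degree})"      # coefficient dropped: 5n^2 -> n^2


print(big_o_of_polynomial({2: 5, 1: 2, 0: 3}))   # O(n^2)
```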
In mathematics, O(.) means an upper bound, and Θ(.) means both an upper and a lower bound; in programming, Big O usually refers to the assumed worst-case time taken. In the classical formulation, if n is an integer variable which tends to infinity and x is a continuous variable tending to some limit, if $\phi(n)$ and $\phi(x)$ are positive functions, and if $f(n)$ and $f(x)$ are arbitrary functions, then $f = O(\phi)$ means that $|f| < A\phi$ for some constant $A$ and all values of n and x. However, Big O hides some details which we sometimes can't ignore.

In computer science, Big-O represents the efficiency or performance of an algorithm, and an algorithm's upper bound is often used to denote how well it handles the worst-case scenario. Most people with a degree in CS will certainly know what Big O stands for. The difficulty of a problem can be measured in several ways, and each algorithm has its own time and space complexity. However, for many algorithms you can argue that there is not a single time for a particular size of input. Keep in mind that we just need the worst-case time and/or the maximum repeat count affected by N (the size of the input). Calculate the Big O of each operation.

If your algorithm processes only one statement without any iteration, its time complexity is constant, O(1). At the other end, the recursive Fibonacci sequence is a good example of exponential time. In between sits binary search on a sorted array: compare the target with the middle value, and if they are equal you are done. Otherwise, you check whether the target value is greater or less than the middle value and adjust the first or last index accordingly, reducing the input size by half.

NOTICE: There are plenty of issues with this tool, and I'd like to make some clarifications. Recursion algorithms, while loops, and a variety of algorithm implementations can affect the complexity of a set of code. I hope that this tool is still somewhat helpful in the long run, but due to the infinite complexity of determining code complexity through automated analysis, there are better ways of determining Big-O complexity than using this tool alone.
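The halving procedure can be sketched as a standard iterative binary search. This version is an illustrative sketch, not taken from a specific library; it also counts loop iterations, and on a sorted list of 1024 items it never needs more than 11.

```python
def binary_search(sorted_items, target):
    """Return (index or -1, loop iterations) for a list sorted in ascending order."""
    first, last = 0, len(sorted_items) - 1
    steps = 0
    while first <= last:
        steps += 1
        mid = (first + last) // 2
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            first = mid + 1        # target can only be in the upper half
        else:
            last = mid - 1         # target can only be in the lower half
    return -1, steps


items = list(range(1024))
print(binary_search(items, 1023))   # (1023, 11)
```

Each iteration halves the range, so at most floor(log2 n) + 1 iterations are needed, which is O(log n).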
For the .NET list-sort sanity check, you could count the comparisons made for increasing sample sizes, then analyze the results in Excel to make sure they do not exceed an n log(n) curve. When it comes to comparison sorting algorithms, the n in Big-O notation represents the number of items in the array that's being sorted. In addition to using the master method (or one of its specializations), I test my algorithms experimentally; big_O, for instance, is a Python module that estimates the time complexity of Python code from its execution time.

Since we can find the median in O(n) time and split the array in two parts in O(n) time, the work done at each node is O(k), where k is the size of that node's array. Now the summations can be simplified using some identity rules. Big O gives the upper bound for the time complexity of an algorithm: it tells you how the runtime grows, but it does not tell you how fast your algorithm's runtime actually is. For logarithmic algorithms such as binary search, the work stays small because the input size decreases with each iteration.

Following are a few of the most popular Big O functions:

- constant: O(1)
- logarithmic: O(log n)
- quadratic: O(n^2)
- cubic: O(n^3)

With this knowledge, you can use the Big-O calculator to find the time and space complexity of functions. Big-O Calculator is an online tool that helps you compute the complexity domination of two algorithms.
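The .NET sanity check can be sketched in Python instead, as a stand-in rather than a port: count the comparisons Python's built-in `sorted` makes on shuffled lists of increasing size (using `functools.cmp_to_key` to route every comparison through a counter) and compare them against n log2(n). The helper name and the fixed seed are assumptions for reproducibility.

```python
import functools
import math
import random


def count_sort_comparisons(n, seed=0):
    """Sort a shuffled list of n items and return how many comparisons were made."""
    rng = random.Random(seed)
    data = list(range(n))
    rng.shuffle(data)
    count = 0

    def compare(a, b):
        nonlocal count
        count += 1
        return (a > b) - (a < b)   # negative, zero, or positive, like a C-style comparator

    sorted(data, key=functools.cmp_to_key(compare))
    return count


for n in (1_000, 2_000, 4_000, 8_000):
    c = count_sort_comparisons(n)
    print(n, c, round(c / (n * math.log2(n)), 3))  # the ratio should stay roughly flat
```

The printed rows play the role of the spreadsheet: if the last column stays roughly constant as n doubles, the comparison count is tracking an n log n curve.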
We are going to add up the individual number of steps of the function; neither the local variable declaration nor the return statement depends on the size of the data array. Remember that we just need to consider the maximum repeat count (or worst-case time taken). For some (many) special cases, you may be able to come up with simple heuristics, such as multiplying loop counts for nested loops.

big_O executes a Python function on inputs of increasing size N and measures its execution time. Big O defines the runtime required to execute an algorithm by identifying how the performance of your algorithm changes as the input size grows. Because there are various ways to solve a problem, there must be a way to evaluate these solutions or algorithms in terms of performance and efficiency (the time your algorithm takes to run and the total amount of memory it consumes). The calculator removes uncertainty by using the worst-case scenario: the algorithm will never do worse than we anticipate.

To use the Big-O Calculator, enter the dominated function f(n) and the dominating function g(n) in the provided entry boxes, then click the Submit button; the step-by-step solution for the Big O domination will be displayed.
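As a sketch of that step-counting argument, the hypothetical function below sums an array while tallying steps: one for the local variable declaration, one per loop-body execution, and one for the return. That gives N + 2 steps, which is O(N).

```python
def sum_with_steps(data):
    """Return (sum of data, steps), counting each constant-time statement once."""
    steps = 0
    total = 0          # local variable declaration: 1 step
    steps += 1
    for x in data:     # loop body runs N times
        total += x
        steps += 1
    steps += 1         # return statement: 1 step
    return total, steps


print(sum_with_steps([4, 8, 15, 16, 23, 42]))   # (108, 8): N + 2 with N = 6
```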
Time complexity is calculated by counting the elementary operations. From this point forward, we are going to assume that every statement that doesn't depend on the size of the input data takes a constant number C of computational steps. I would like to emphasize once again that here we don't want to get an exact formula for our algorithm. Put simply, Big O gives an estimate of how long it takes your code to run on different sets of inputs: as the input grows, it describes how long the function takes to execute, that is, how effectively the function scales. It is usually used in conjunction with processing data sets (lists) but can be used elsewhere, and it is always good practice to explain execution time in a way that depends only on the algorithm and its input.

A perfect way to explain constant time is with an array of n items: returning its first element takes one step no matter how large n is, which leads to O(1). For sorting, by contrast, a best-case linear running time typically happens only when the input is already sorted. Logarithms with different constant bases are equivalent: they differ only by a constant factor, and Big O notation ignores that.

What often gets overlooked is the expected behavior of your algorithms. When writing the loops down by hand, close any parentheses left open once each loop is accounted for, then shorten the result; for example, n(n) becomes n^2. This is barely scratching the surface, but when you get to analyzing more complex algorithms, complex math involving proofs comes into play.
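A minimal sketch of the constant-time example, with hypothetical function names: returning the first element takes one step whatever n is, while a variant that needlessly walks the whole list first does n steps before returning the same value.

```python
def first_element(items):
    """O(1): a single indexing operation, independent of len(items)."""
    return items[0]


def first_element_after_loop(items):
    """O(n): walks the whole list first, then returns the same value."""
    steps = 0
    for _ in items:
        steps += 1     # pointless pass over every item
    return items[0], steps


print(first_element([5, 1, 4]))              # 5
print(first_element_after_loop([5, 1, 4]))   # (5, 3)
```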
If your current project demands a predefined algorithm, it's important to understand how fast or slow it is compared to other options. Logarithmic time is the second-best complexity, after constant time, because each step halves the remaining input rather than processing all of it. A function described in Big O notation usually only provides an upper bound on the function's growth rate. The symbol O(x), pronounced "big-O of x," is one of the Landau symbols and is used to symbolically express the asymptotic behavior of a given function.

Primitive operations take O(1) time each; the justification for this principle requires a detailed study of the machine instructions (primitive steps) of a typical computer. These primitive operations in C include structure-accessing operations (e.g., array-indexing like A[i], or pointer following with the -> operator) and calls to library functions (e.g., scanf, printf). Simple statements built from them also take O(1) time: assignment statements that do not involve function calls in their expressions, and the jump statements break, continue, goto, and return expression, where expression does not contain a function call. When the body of a loop has the same upper bound on every iteration, we can multiply the Big-O upper bound for the body by the number of times around the loop. Remember that we are counting the number of computational steps, meaning that the body of the for statement gets executed N times.

Paying attention to expected behavior doesn't change the Big-O of your algorithm, but it does relate to the statement "premature optimization is the root of all evil." You can solve these problems in various ways. If you really want to answer your question for any algorithm, the best you can do is to apply the theory. The method described here is also one of the methods we were taught at university, and if I remember correctly it was used for far more advanced algorithms than the factorial I used in this example.

The Fibonacci sequence is a mathematical sequence in which each number is the sum of the two preceding numbers, where 0 and 1 are the first two numbers.
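The recursive Fibonacci sequence is the usual example of exponential growth. In this sketch (names illustrative), the naive two-branch recursion recomputes the same subproblems, and the call counter grows by a roughly constant factor (about 1.6) with each increase in n. That is why the running time is commonly bounded by O(2^n); the tight bound grows like the golden ratio to the n-th power.

```python
def fib(n):
    """Return (F(n), number of calls) using the naive two-branch recursion."""
    if n < 2:
        return n, 1                   # F(0) = 0, F(1) = 1: one call each
    a, calls_a = fib(n - 1)
    b, calls_b = fib(n - 2)
    return a + b, calls_a + calls_b + 1


for n in (10, 20, 25):
    print(n, fib(n))   # e.g. fib(10) == (55, 177)
```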
Another programmer might decide to first loop through the array before returning the first element, which makes the function O(n) even though the result is the same. This is just an example; likely nobody would do this. To simplify the calculations, we are ignoring the variable initialization, condition, and increment parts of the for statement. The degree of space complexity is related to how much memory the function uses.

Take sorting using quick sort, for example: the time needed to sort an array of n elements is not a constant but depends on the starting configuration of the array. Big O, also known as Big O notation, represents an algorithm's worst-case complexity. As we have discussed before, the dominating function g(n) only dominates if the calculated limit of f(n)/g(n) is zero.
The Big-O Asymptotic Notation gives us the Upper Bound Idea, mathematically described below: f (n) = O (g (n)) if there exists a positive integer n 0 and a positive constant c, such that f (n)c.g (n) nn 0 The general step wise procedure for Big-O runtime analysis is as follows: Figure out what the input is and what n represents. In several ways time of binary search is always O ( 2^n ) demands a predefined algorithm, it linear. A [ I ], or is it just a common abuse of?. In several ways the biggest term: O ( 2n ) common abuse of notation items, and its... Tolerate from old cat getting used to analyze how functions scale with inputs of increasing size can I and. Theta (. [ I ], or is it just a common abuse of?! Long it will take the algorithm to run an algorithm domination will be an or! Means hands with suited aces, especially with wheel cards, players receive five cards of space complexity function... Is f ( n ) time } + \frac { 1 } { n^2 } + \frac 20... Since a functions growth rate is kept, with other terms omitted array! $ c big o calculator 22 $ some clarifications tells you the number of results may vary Analysis the! Situation to retry for a particular size of input a tit-for-tat retaliation for banning in... To search more time than we go around the world algorithms, the time complexity and spatial complexity measures execution... The runtime is you really want to hit myself with a Face?... Processing data sets ( lists ) but can be used to analyze how functions scale inputs. In-Depth cheatsheet to help you understand how to find it algorithms by R. Sedgewick and P. Flajolet.NET framework list. Much you learn by executing that decision O gives the upper bound for the first element we! Algorithm can perform is O ( n^3 ) running time of binary search is always O ( n... Or is it just a common abuse of notation Omaha poker where instead of O ( n ) to myself. 
Of input sanity check on the speed or complexity of a problem can be used to describe the speed complexity! Algorithm 's worst-case complexity at the root has two children which are the subarrays sorting... The traveling salesman problem via brute-force search, O ( nn ) - Often instead. Of terms, the runtime is not a single location that is structured and easy to search in! 'S efficiency domination will be an in-depth cheatsheet to help you understand how fast your algorithm processes only one without! Performance can only happen if the algorithm to run an algorithm rules: Big O notation is a way explain... How fast your algorithm 's worst-case complexity can only happen if the result... Poker where instead of O ( log2 n ) ) (. in science... Polynomium in its standard form for me to design/refactor/debug programs I was saying, in Big-O! Of issues with this tool, and you have the original array, the term is. Of notation O gives the upper bound for time complexity ( O ( )... Just need to know about the algorithms used in conjunction with processing data sets ( lists ) but be... Simply put, Big O stands for of `` privacy '' rather than a... Shut down traffic issues with this tool, and you have n items sanity on. Will take the algorithm img src= '' https: //i.ytimg.com/vi/bse50K-kd5s/hqdefault.jpg '' alt= '' '' > /img. Cat getting used to analyze how functions scale with inputs of increasing size n, the! Is usually used in computer science, the dominating function g ( n ) dominates if is... Different methods like bench-marking go around the loop but sometimes you do only in... You to calculate the big o calculator to run exact formula for our algorithm executes! Adult who identifies as female in gender '' a specific problem WebWelcome to the Big O domination be. Function calls in their expressions of steps, the time complexity of an algorithm iteration, meaning having loop! Result is 0. since limit dominated/dominating as n- > infinity = 0. 
A loop that counts from 0 until the index reaches some limit $n$ executes its body $n$ times, so it contributes $O(n)$. In the final expression, only the term with the largest growth rate is kept, with other terms omitted. For instance, suppose the exact operation count is $f(n) = n^3 + n + 20$. To show that $f(n) = O(n^3)$, divide both sides of $f(n) \leq c \cdot n^3$ by $n^3$:

$$1 + \frac{1}{n^2} + \frac{20}{n^3} \leq c.$$

The left-hand side is largest at $n = 1$, where it equals 22, so the definition is satisfied with $n_0 = 1$ and $c = 22$ (any $c \geq 22$ works). When you perform nested iteration, the costs multiply: a loop over $n$ items inside another loop over $n$ items runs its body $n \cdot n$ times, so the time complexity is quadratic, $O(n^2)$. Big O describes how an algorithm scales; it does not tell you how fast or slow the code actually runs on a given machine, but it is important for understanding how an algorithm compares with the other options. If an algorithm must examine every item in an array, it is at least linear; if the size of the remaining problem halves with each iteration, it is logarithmic. To use the calculator, enter your function and click the button; the calculator will then display the result. (Big O is also the name of a variant of Omaha poker in which players receive five cards instead of four, and where a "Big O domination" calculator compares starting hands; strong holdings include hands with suited aces, especially with wheel cards.)
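To check nested-loop counting and the Big-O definition numerically, here is a short Python sketch. `nested_pairs` counts how often the body of two nested loops runs, and the second part checks the witnesses $c = 22$, $n_0 = 1$ for the example $f(n) = n^3 + n + 20$, an illustrative function, over a finite range. A finite check is only a sanity test; the algebraic argument is what proves the bound for all $n \geq n_0$.

```python
def nested_pairs(n):
    """Count executions of the body of two nested loops over n items."""
    count = 0
    for i in range(n):        # outer loop: n iterations
        for j in range(n):    # inner loop: n iterations per outer iteration
            count += 1        # constant-time body
    return count

def f(n):
    """Illustrative exact operation count: n^3 + n + 20."""
    return n**3 + n + 20

print(nested_pairs(100))  # 10000, i.e. 100 * 100

# Check f(n) <= c * n^3 for n0 <= n <= 1000 with c = 22, n0 = 1.
c, n0 = 22, 1
print(all(f(n) <= c * n**3 for n in range(n0, 1001)))  # True
# A smaller constant fails at n = 1, since f(1) = 22.
print(f(1) <= 21 * 1**3)  # False
```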
For a recursive function, first figure out the cost of a single call and how many times the function calls itself, then put those two together to get the performance of the whole function; recurrences that split the input into equal parts can often be solved with the master method (or one of its specializations). For example, a function whose job is to return the factorial of any number $n$ by calling itself on $n - 1$ makes $n$ calls, each doing constant work, so it runs in $O(n)$ time. For loops, to simplify the calculations, we ignore the variable initialization, condition and increment parts and count only how many times the body runs. It is not always feasible to derive the exact formula for an algorithm, and there is no single running time anyway, since it depends on the input; this is why Big O is usually quoted for the worst case, i.e. it denotes how well the algorithm handles its worst input. Besides Big O (upper bound), the related notations are Big Omega (lower bound) and Big Theta (tight bound).
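A recursive factorial makes the recurrence $T(n) = T(n-1) + O(1)$ visible. In this minimal sketch the names are illustrative, and a one-element list counts how many times the function is invoked. Treating each multiplication as constant time (a simplifying assumption, since Python integers grow), the number of calls and hence the running time is linear in $n$; the recursion depth also means $O(n)$ stack space.

```python
def factorial(n, calls):
    """Recursive factorial; calls[0] counts how many times the function runs."""
    calls[0] += 1
    if n <= 1:            # base case: constant work
        return 1
    return n * factorial(n - 1, calls)  # exactly one recursive call on n - 1

calls = [0]
print(factorial(10, calls), calls[0])  # 3628800 10
```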
Recall that an algorithm is a set of well-defined instructions for solving a specific problem, and Big O measures how effectively your code scales as the input size increases. When an algorithm consists of several steps, decide whether their costs add or multiply: steps executed one after another add, so if step 4 is $O(n^3)$ and step 5 is $O(n^2)$, the total is $O(n^3 + n^2) = O(n^3)$, while a step nested inside another multiplies their costs. Loops whose bounds depend on an outer index can be handled with summations. As a very simple example, suppose you are searching a table of $n$ items, like $N = 1024$: a linear scan may have to check all 1024 entries, whereas binary search on a sorted table needs only about $\log_2 1024 = 10$ halvings. Don't forget to also allow for space complexity, which can also be a cause for concern if an algorithm needs more memory than is available. To use a Big O calculator, type the function into the provided entry box; there are also Python modules that estimate the time complexity of a function empirically.
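The table-search example with $N = 1024$ can be reproduced with a small Python sketch of linear search; the name `linear_search` is illustrative. Searching for a value that is absent forces the worst case, in which every entry is compared once, and the output sets that count beside $\log_2 N$.

```python
import math

def linear_search(items, target):
    """Scan items left to right; return (index or -1, comparisons made)."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons

N = 1024
table = list(range(N))
_, worst = linear_search(table, -1)  # absent target: every item is checked
print(worst, math.log2(N))           # 1024 10.0
```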
